Jotting down a roadmap of ideas I want to explore in this space in the near future, so they don’t get lost or stuck in my drafts. All of these have something to do with how LLMs can be sculpted and explored as a new kind of material:

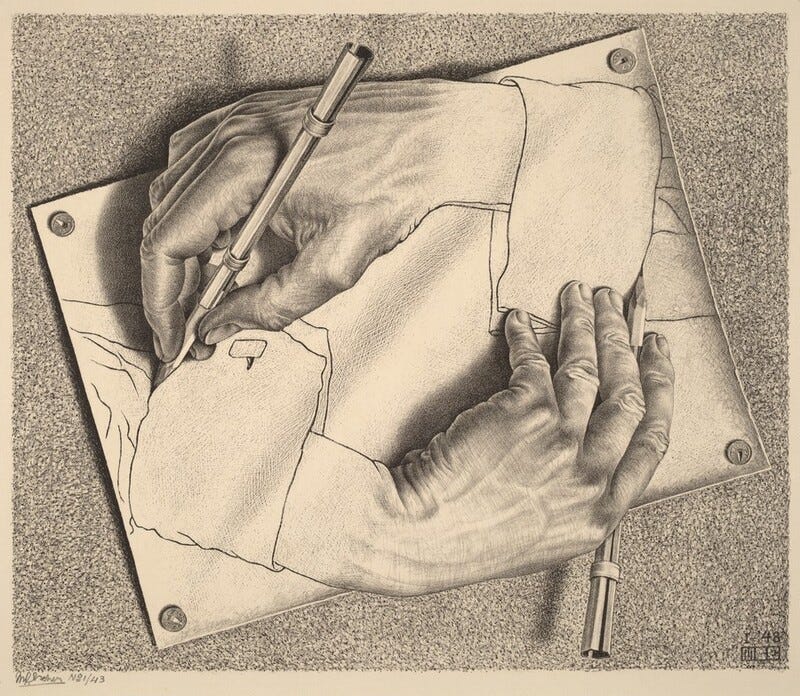

Collage is the AI-native creative process

Collage seems like the right way to think about using AI creatively. Synthesize infinite artifacts (music, writing, images, video) then recombine them in interesting ways. The importance of a piece isn’t in the primary materials it’s made of, but how they’re put together. After AI commodifies the raw material, what’s left is combination.

MATEOS (markdown-as-engine-of-application-state)

HATEOAS (hypermedia as the engine of application state) is an architectural pattern where “A user-agent makes an HTTP request to a REST API through an entry point URL. All subsequent requests the user-agent may make are discovered inside the response to each request.” The client just needs to be able to interpret the hypermedia sent back from the server, and use it to guide its next request. What if we applied the same principles to a client-server architecture where both server and client use LLMs to communicate via markdown? A server sends an initial markdown document to the client describing its affordances. The client uses a model to understand this initial document and decide on next actions. The client model produces a markdown “prompt” to send back to the server. The server model produces another markdown response and sends it back to the client. And so on. Markdown becomes the engine of application state.
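To make the loop concrete, here is a minimal sketch of the exchange described above. Everything in it is an assumption for illustration: `Model`, `serverModel`, `clientModel`, and `runSession` are hypothetical names, both “models” are hard-coded stubs rather than real LLM calls, and a real version would be asynchronous and go over HTTP.

```typescript
// A "model" here is any function from a markdown prompt to a markdown reply.
// Real model calls would be asynchronous; a synchronous stub keeps the sketch short.
type Model = (markdown: string) => string;

// Hypothetical server model: answers every request with a markdown document
// describing the current state and the actions available next.
const serverModel: Model = (request) => {
  if (request.indexOf("add milk") !== -1) {
    return "# Shopping list\n\n- milk\n\n## Actions\n\n- Say `done` to finish";
  }
  if (request.indexOf("done") !== -1) {
    return "# Goodbye\n\nNo further actions.";
  }
  return "# Shopping list\n\n(empty)\n\n## Actions\n\n- Say `add milk` to add an item";
};

// Hypothetical client model: reads the document and replies with the first
// action it finds in backticks, or an empty string when none is offered.
const clientModel: Model = (document) => {
  const match = document.match(/`([^`]+)`/);
  return match ? match[1] : "";
};

// Drive the exchange: each request is derived only from the previous response.
function runSession(server: Model, client: Model, maxTurns: number): string[] {
  const transcript: string[] = [];
  let response = server(""); // entry point: the initial affordances document
  transcript.push(response);
  for (let turn = 0; turn < maxTurns; turn++) {
    const request = client(response);
    if (request === "") break; // the document offered no further actions
    response = server(request);
    transcript.push(response);
  }
  return transcript;
}

const transcript = runSession(serverModel, clientModel, 10);
console.log(transcript.join("\n\n---\n\n"));
```

The client never knows the server’s actions ahead of time. It only ever acts on what the latest document offers, which is the HATEOAS constraint carried over to markdown.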

Implementing .filter(), .map(), and .reduce() with language models

A programming model I’m excited about is applying prompts to collections of data in a way that mirrors .filter(), .map(), and .reduce(). For .filter() and .map(), I imagine that the callback applied to each item of the input collection is a prompt. With .map(), each item in the output collection is the response to the prompt. With .filter(), the response decides whether the item is kept. For .reduce(), the callback is also a prompt. But .reduce() also takes an accumulated value. This would be the response to the previous prompt. The response would be composed with a “base prompt”, and together they would prompt the model to generate the next accumulated value.
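Here is a rough sketch of what those three operations could look like. The names `promptFilter`, `promptMap`, and `promptReduce` are made up for illustration, the way each prompt is composed with an item is just one possible choice, and `toyModel` is a hard-coded stand-in so the example runs without a real model (a real call would be async).

```typescript
// Hypothetical model interface: prompt in, text out. Real calls would be async.
type Model = (prompt: string) => string;

// filter: keep the items for which the model answers "yes" to the prompt.
function promptFilter(model: Model, prompt: string, items: string[]): string[] {
  return items.filter((item) => /^yes/i.test(model(`${prompt}\n\n${item}`).trim()));
}

// map: replace every item with the model's response to the prompt.
function promptMap(model: Model, prompt: string, items: string[]): string[] {
  return items.map((item) => model(`${prompt}\n\n${item}`));
}

// reduce: the accumulator is the previous response, composed with the
// base prompt and the next item to produce the next accumulated value.
function promptReduce(model: Model, basePrompt: string, items: string[], initial: string): string {
  return items.reduce(
    (accumulated, item) => model(`${basePrompt}\n\nSo far: ${accumulated}\n\nNext: ${item}`),
    initial
  );
}

// Toy stand-in for a model so the sketch runs offline. It only understands
// the three instructions used below.
const toyModel: Model = (prompt) => {
  const [instruction, ...rest] = prompt.split("\n\n");
  if (instruction === "Is this a fruit? Answer yes or no.") {
    return ["apple", "pear"].indexOf(rest[0]) !== -1 ? "yes" : "no";
  }
  if (instruction === "Shout this.") {
    return rest[0].toUpperCase();
  }
  // Otherwise treat it as a reduce step: append the next item to the running value.
  const soFar = rest[0].replace("So far: ", "");
  const next = rest[1].replace("Next: ", "");
  return soFar === "" ? next : `${soFar}, ${next}`;
};

const items = ["apple", "rock", "pear"];
const fruits = promptFilter(toyModel, "Is this a fruit? Answer yes or no.", items);
const shouted = promptMap(toyModel, "Shout this.", fruits);
const joined = promptReduce(toyModel, "Join these.", shouted, "");
console.log(fruits, shouted, joined);
```

With a real model, the interesting design question is the .reduce() step: how the previous response and the base prompt get composed decides how much of the accumulated value survives each pass.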

LLMs are holodecks

Inspired by this post, I want to explore how LLMs can be viewed mainly as simulators-in-text. I think a fruitful analogy for this is the holodeck from Star Trek. You enter a room (run a model) and speak aloud what you want to experience (send a prompt). The result is a simulation-in-text that you can steer. Crucially, there is no “mind” or “agent” behind the simulation. That becomes more obvious in base models which haven’t been RLHF’d. There’s only the simulation itself, produced from the prompt of the user, unfolding in text.

LLMs are a manufacturing technology

Multi-modal LLMs are a way to mass produce media. The change in effort required to produce new media is like the change in effort required to produce new objects when factories and assembly lines were first invented. Many LLMs working together are like hyper-dimensional robot arms in media factories, spinning around in latent space to mass produce text, images, and video. What kind of factories can we build? What kind of media is useful to mass-manufacture? What’s the consequence of flooding the world with mass-manufactured media the same way we flooded the world with industrial objects?

Compositional prompting

How could we build better experiences for composing prompts? Right now the state of the art seems to be a {{this_type}} of templating language embedded in documents to represent variables in a prompt, plus many experiences that use a nodes-on-canvas editor for chaining prompts together. What would a full-blown programming language or IDE for building and running prompts look like? What’s a better way to express prompts that are composed of multiple documents and data sources? The creators of Cursor explored this with their Prompt-as-JSX library priompt. Maybe building up prompts and fetching data for them could be similar to how we compose a web page out of React components? What’s the best way to express a dependency graph between the different parts of a prompt that need to reactively update in response to changes? Maybe it’s something like React’s rendering model?
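As a thought experiment, here is a minimal sketch of prompt parts written as plain functions of props, loosely in the spirit of React components. This is not priompt’s API. `PromptPart`, `Role`, `Guidelines`, `Diff`, and `ReviewPrompt` are hypothetical names, and “re-rendering” here just means calling the root function again with new props, with no memoization or dependency tracking.

```typescript
// A prompt "part" is a function from props to text, like a tiny component.
type PromptPart<P> = (props: P) => string;

interface ReviewProps {
  language: string;
  guidelines: string[];
  diff: string;
}

const Role: PromptPart<{ language: string }> = ({ language }) =>
  `You are a careful ${language} reviewer.`;

// Renders nothing when there are no guidelines, like a component returning null.
const Guidelines: PromptPart<{ guidelines: string[] }> = ({ guidelines }) =>
  guidelines.length === 0
    ? ""
    : "Guidelines:\n" + guidelines.map((g) => `- ${g}`).join("\n");

const Diff: PromptPart<{ diff: string }> = ({ diff }) => "Diff:\n" + diff;

// The root part composes the others and drops empty sections.
const ReviewPrompt: PromptPart<ReviewProps> = (props) =>
  [Role(props), Guidelines(props), Diff(props)]
    .filter((part) => part !== "")
    .join("\n\n");

// "Rendering" twice with different props yields two different prompts.
const withGuidelines = ReviewPrompt({
  language: "TypeScript",
  guidelines: ["Prefer const"],
  diff: "+ const x = 1;",
});
const withoutGuidelines = ReviewPrompt({
  language: "TypeScript",
  guidelines: [],
  diff: "+ const x = 1;",
});
console.log(withGuidelines);
console.log(withoutGuidelines);
```

A reactive version would track which parts depend on which props and only recompute those, which is where the comparison to React’s rendering model gets interesting.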

Moving from one-off chat UX to multi-threaded multiplayer conversations

What if interacting with models was more like thinking aloud on Twitter? What if you could @claude, pick up a thread wherever you left off at any time, or fork a conversation just by replying to one of the tweets in a reply-chain? What if models didn’t need to stream tokens synchronously, but could reply asynchronously to posts on your timeline whenever it made the most sense? What if you could invent new @agents to talk with on the timeline, by tuning system prompts and rules about how they reply and post? What if you did all this in a Discord-like server where all your friends, collaborators, and @agents are posting together?
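One way to picture the forking part: if every post just points at the post it replies to, then a “conversation” is the chain from the root down to any post, and forking is nothing more than replying to an earlier post. A minimal sketch, where `Post`, `Timeline`, and the `@critic` agent are all hypothetical names made up for illustration:

```typescript
interface Post {
  id: number;
  parentId: number | null; // null for a top-level post
  author: string;
  text: string;
}

class Timeline {
  private posts: Post[] = [];

  // Add a post, optionally as a reply to an existing one.
  post(author: string, text: string, parentId: number | null = null): Post {
    if (parentId !== null && this.posts[parentId] === undefined) {
      throw new Error(`Unknown parent post: ${parentId}`);
    }
    const created: Post = { id: this.posts.length, parentId, author, text };
    this.posts.push(created);
    return created;
  }

  // The context for a reply is the chain from the root down to the given post.
  thread(id: number): Post[] {
    const chain: Post[] = [];
    let current: Post | undefined = this.posts[id];
    while (current !== undefined) {
      chain.unshift(current);
      current = current.parentId === null ? undefined : this.posts[current.parentId];
    }
    return chain;
  }
}

const timeline = new Timeline();
const root = timeline.post("me", "Idea: markdown as the engine of application state");
const question = timeline.post("@claude", "What would carry the links?", root.id);
const answer = timeline.post("me", "Actions listed in the document.", question.id);
// Forking: a second reply to the root starts a parallel branch.
const fork = timeline.post("@critic", "Why not plain JSON?", root.id);

const mainThread = timeline.thread(answer.id).map((p) => p.id);
const forkThread = timeline.thread(fork.id).map((p) => p.id);
console.log(mainThread, forkThread);
```

An @agent replying asynchronously would only need the result of `thread()` as its context, so it can answer any post at any time without a live chat session.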

Cooking with recipe books

When coding with LLMs, there’s huge benefit in having documentation that can be fed into context. However, most of the documentation available today was written for humans and only recently ported to .md for LLMs (see llms.txt). What does LLM-native documentation look like? I think it’s something like a collection of “recipes” that can be fed into context to show the model all the important patterns that it can reproduce when building. These recipes become less explanatory and narrative, since they’re not aimed at a human being. Instead, they become ways to steer the model towards producing the right patterns by bringing relevant recipes into context. Consider a codebase with a bespoke way of doing forms, or API endpoints, or payments integrations. A recipe book that demonstrates all of these codebase-specific patterns makes a model much more effective at working idiomatically inside of the codebase. Context7 has created some really cool tools in this direction.
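A minimal sketch of what pulling recipes into context might look like, assuming a simple tag match against the words of the task. All of it is hypothetical: `Recipe`, `selectRecipes`, and `buildContext` are made-up names, the helpers `useAppForm` and `defineEndpoint` inside the recipes are invented stand-ins for a codebase’s bespoke patterns, and a real tool would likely use something smarter than keyword matching.

```typescript
interface Recipe {
  title: string;
  tags: string[]; // lowercase keywords that make this recipe relevant
  code: string;   // the pattern the model should reproduce
}

// A tiny recipe book for an imaginary codebase (helpers are hypothetical).
const recipeBook: Recipe[] = [
  {
    title: "Forms",
    tags: ["form", "forms", "validation"],
    code: "const form = useAppForm({ schema, onSubmit });",
  },
  {
    title: "API endpoints",
    tags: ["endpoint", "endpoints", "api"],
    code: "export const GET = defineEndpoint({ auth: true }, handler);",
  },
];

// Pick every recipe that shares at least one tag with the words of the task.
function selectRecipes(book: Recipe[], task: string): Recipe[] {
  const words = task.toLowerCase().split(/\W+/);
  return book.filter((recipe) => recipe.tags.some((tag) => words.indexOf(tag) !== -1));
}

// Assemble the context: relevant recipes first, then the task itself.
function buildContext(recipes: Recipe[], task: string): string {
  const sections = recipes.map((recipe) => `## ${recipe.title}\n\n${recipe.code}`);
  return sections.concat([`## Task\n\n${task}`]).join("\n\n");
}

const task = "Add a signup form with validation";
const selected = selectRecipes(recipeBook, task);
const context = buildContext(selected, task);
console.log(context);
```

Note how terse the recipes are: no explanation, just the pattern. The steering comes from which recipes end up next to the task.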
