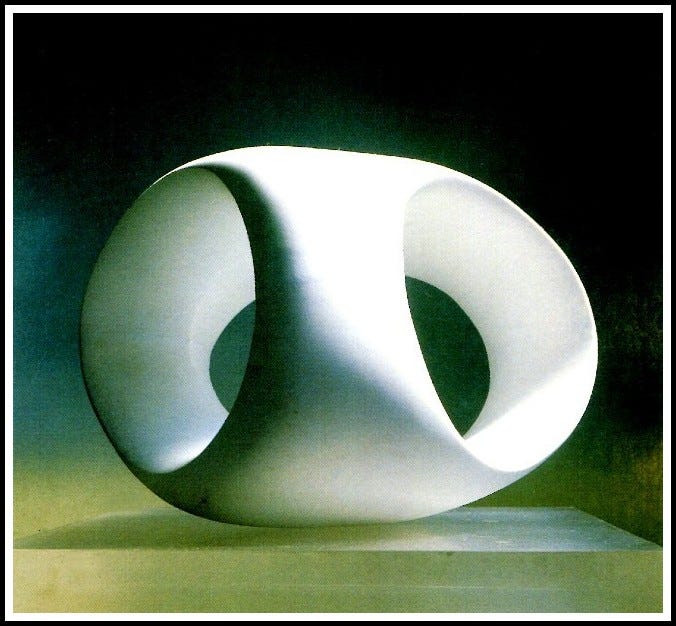

Every material has its own individual qualities. It is only when the sculptor works direct, when there is an active relationship with the material, that the material can take its part in the shaping of an idea.

Until recently, no literate AI existed. Now, models can read and write fluently.

In most sci-fi, literacy implies general intelligence. HAL in 2001 and Samantha in Her both speak and listen. As the first literate AI arrives on Earth, we expect AGI to follow.

But LLMs violate sci-fi intuition. They unbundle literacy from other capabilities. LLMs need training and augmentation to exhibit selfhood, agency, and transparent reasoning.

Untrained models don’t exhibit selfhood. AI labs train models to mimic friendly conversational partners. There’s no “I” in LLM without training.

Models don’t act like agents on their own. They require a scaffold of other programs, feedback loops, and prompt engineering to pursue a goal.

Models don’t think legibly. Labs train models to produce “chain of thought” output that mimics thinking aloud. But “thought traces” don’t always faithfully represent how the model processes language.

Analogizing LLMs to sci-fi AI might mislead us. Instead, let’s think of models as a mysterious new computational material. To develop proper intuition, we need to sculpt with this material for hours, watching how it responds to our prompts. If we assume we’re working with sci-fi AI, we might miss what this new material can become. Marble doesn’t carve like sandstone.

In the last century, new design followed new materials. The Bauhaus bent tubular steel. Architects poured concrete into curves. Designers wrapped objects in candy-colored plastic. What new forms are hiding inside the material of literate intelligence? It can’t all be chatbots.
